Use Kafka with Flume - CRS2

Created by Junyangz at 2018-08-01 10:53:46, based on dmy's docs.

Last edited by Junyangz at 2018-08-01 13:32:51.

Flume

Introduction to Flume

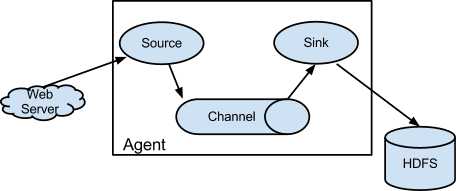

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

Installation

Prerequisites

Install the JDK

Extract the archive

Configuration and running

Use the Kafka sink to send data from a Flume source to Kafka (see refer-doc).

Add Flume to the PATH environment variable
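A minimal sketch of this step, assuming Flume was extracted to /opt/flume (a hypothetical path; use your own). To make the setting permanent, the same two lines would go into ~/.bashrc or /etc/profile.

```shell
# Hypothetical install location: wherever the Flume tarball was extracted.
export FLUME_HOME=/opt/flume
# Put bin/flume-ng on the PATH so it can be run from any directory.
export PATH="$PATH:$FLUME_HOME/bin"
```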

conf/flume-env.sh
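bin/flume-ng sources flume-env.sh from the directory passed with --conf; the file is usually created by copying conf/flume-env.sh.template. A sketch of the two settings most often changed there. The JDK path and heap sizes are assumptions, not values from the original setup.

```shell
# conf/flume-env.sh: sourced by bin/flume-ng from the --conf directory.
# Hypothetical JDK location; point it at the JDK installed earlier.
export JAVA_HOME=/usr/java/jdk1.8.0
# Agent heap size (assumed values); the jmxremote flag lets monitoring tools attach.
export JAVA_OPTS="-Xms1g -Xmx2g -Dcom.sun.management.jmxremote"
```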

Key configuration parameters in conf/spool1-kafka.properties:
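A minimal sketch of such a file, written with a heredoc from $FLUME_HOME. It wires a spooling-directory source through a memory channel into the Kafka sink, using the property names from the Flume 1.7+ user guide. The agent name a1, the spool directory, the broker list, the topic name, and all sizes are hypothetical placeholders. Comments sit on their own lines because a .properties file treats a trailing `#` as part of the value.

```shell
# Run from $FLUME_HOME; conf/ already exists in a real install.
mkdir -p conf
cat > conf/spool1-kafka.properties <<'EOF'
# Agent a1: spooldir source -> memory channel -> Kafka sink
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Source: pick up files dropped into this (hypothetical) directory
a1.sources.r1.type = spooldir
a1.sources.r1.spoolDir = /data/flume/spool1
a1.sources.r1.channels = c1

# Channel: in-memory buffer between source and sink (sizes are assumptions)
a1.channels.c1.type = memory
a1.channels.c1.capacity = 10000
a1.channels.c1.transactionCapacity = 1000

# Sink: publish events to Kafka (broker list and topic are hypothetical)
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.kafka.bootstrap.servers = client1:9092,client2:9092,client3:9092
a1.sinks.k1.kafka.topic = spool1
a1.sinks.k1.kafka.flumeBatchSize = 100
a1.sinks.k1.kafka.producer.acks = 1
a1.sinks.k1.channel = c1
EOF
```

Note that the source uses `channels` (plural) while the sink uses `channel` (singular), and transactionCapacity has to be at least the sink's batch size. For the other spool files, typically only spoolDir (and kafka.topic, if each feed gets its own topic) would change.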

conf/spool[2-n]-kafka.properties are configured the same way.

Start
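A sketch of a start script that launches one agent per conf/spool*-kafka.properties file using the standard `flume-ng agent` options (--conf, --conf-file, --name). It assumes it is run from $FLUME_HOME and that every file defines an agent named a1 (an assumption; use whatever agent name your files declare). The script name and log file names are hypothetical.

```shell
cat > start-flume.sh <<'EOF'
#!/usr/bin/env bash
# Start one Flume agent per conf/spool*-kafka.properties file.
# Assumes: run from $FLUME_HOME, each file defines an agent named a1.
set -euo pipefail
shopt -s nullglob
mkdir -p logs
for conf_file in conf/spool*-kafka.properties; do
  name=$(basename "$conf_file" .properties)
  # Each agent runs in its own JVM; console output goes to logs/<name>.out.
  nohup bin/flume-ng agent --conf conf --conf-file "$conf_file" --name a1 \
    -Dflume.root.logger=INFO,console > "logs/$name.out" 2>&1 &
  echo "started $name (pid $!)"
done
EOF
chmod +x start-flume.sh
```

Run it with `./start-flume.sh`; `tail -f logs/spool1-kafka.out` then shows whether the Kafka sink connected to the brokers.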

Kafka

Introduction to Kafka

Kafka® is used for building real-time data pipelines and streaming apps. It is horizontally scalable, fault-tolerant, wicked fast, and runs in production in thousands of companies.

Kafka installation

Prerequisites

Install the JDK

Start ZooKeeper

Extract the archive

Configure Kafka

Add Kafka to the PATH environment variable
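As with Flume, a minimal sketch assuming Kafka was extracted to /opt/kafka (a hypothetical path). Persist the lines in ~/.bashrc or /etc/profile if they should survive a new login.

```shell
# Hypothetical install location: wherever the Kafka tarball was extracted.
export KAFKA_HOME=/opt/kafka
# Put the kafka-*.sh scripts on the PATH.
export PATH="$PATH:$KAFKA_HOME/bin"
```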

config/server.properties
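A sketch of the per-broker settings that usually need changing, written as a minimal file. All values are assumptions: broker.id must be unique on each node, the listener host must be the node's own hostname, and zookeeper.connect must list your ZooKeeper ensemble. Writing the file this way replaces the shipped defaults, so the script backs up the original once; settings not listed fall back to Kafka's built-in defaults.

```shell
# Run from $KAFKA_HOME; back up the shipped file once before replacing it.
mkdir -p config
if [ -f config/server.properties ] && [ ! -f config/server.properties.orig ]; then
  cp config/server.properties config/server.properties.orig
fi
cat > config/server.properties <<'EOF'
# Unique integer per broker (1, 2, 3, ... on each node)
broker.id=1
# Address this broker binds to and advertises (hypothetical host name)
listeners=PLAINTEXT://client1:9092
# Where partition data is stored (hypothetical path)
log.dirs=/data/kafka-logs
# Default partition count for auto-created topics (assumed value)
num.partitions=3
# How long to keep log segments (168 hours is Kafka's default)
log.retention.hours=168
# ZooKeeper ensemble used by the cluster (hypothetical hosts)
zookeeper.connect=client1:2181,client2:2181,client3:2181
EOF
```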

bin/kafka-server-start.sh

Add the following code to enable JMX (for monitoring):
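A sketch of that addition, assuming port 9999 (any free port works). kafka-server-start.sh hands off to bin/kafka-run-class.sh, which adds `-Dcom.sun.management.jmxremote.port=$JMX_PORT` to the JVM options whenever JMX_PORT is set.

```shell
# Near the top of bin/kafka-server-start.sh (port 9999 is an assumption).
# kafka-run-class.sh turns JMX_PORT into -Dcom.sun.management.jmxremote.port.
export JMX_PORT=${JMX_PORT:-9999}
```

Placing the export inside kafka-server-start.sh rather than in a shell profile matters: every kafka-*.sh tool goes through kafka-run-class.sh, so a globally exported JMX_PORT would make tools such as kafka-topics.sh try to bind the same port while the broker already holds it.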

Adjust the Java heap and GC settings in the same script:

Current settings on the test machine:

-Xmx6g -Xms6g -XX:PermSize=128m -XX:MaxPermSize=256m

LinkedIn's Java configuration:

-Xmx6g -Xms6g -XX:MetaspaceSize=96m -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:G1HeapRegionSize=16M -XX:MinMetaspaceFreeRatio=50 -XX:MaxMetaspaceFreeRatio=80
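In bin/kafka-server-start.sh the heap is only set when KAFKA_HEAP_OPTS is empty, so the change goes into that block. A sketch using the LinkedIn values above. KAFKA_JVM_PERFORMANCE_OPTS is the variable bin/kafka-run-class.sh reads for GC flags, and setting it replaces the script's default GC options. The -XX:PermSize/-XX:MaxPermSize flags in the test-machine line have no effect on Java 8, which replaced PermGen with Metaspace and ignores them with a warning; the LinkedIn line uses the Metaspace flags instead.

```shell
# Inside bin/kafka-server-start.sh: only applies when KAFKA_HEAP_OPTS is not already set.
if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then
    # Heap from the LinkedIn settings; size it to the machine's RAM.
    export KAFKA_HEAP_OPTS="-Xmx6g -Xms6g"
    # G1/Metaspace flags from the same settings; read by kafka-run-class.sh.
    export KAFKA_JVM_PERFORMANCE_OPTS="-XX:MetaspaceSize=96m -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:G1HeapRegionSize=16M -XX:MinMetaspaceFreeRatio=50 -XX:MaxMetaspaceFreeRatio=80"
fi
```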

Start Kafka
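A sketch of a per-node start script; ZooKeeper must already be running. The `-daemon` flag runs the broker in the background with its console output under $KAFKA_HOME/logs. The five-second wait and the `jps` check are a hypothetical convenience, not part of Kafka.

```shell
cat > start-kafka.sh <<'EOF'
#!/usr/bin/env bash
# Start the local broker in the background and confirm its JVM is up.
set -euo pipefail
KAFKA_HOME=${KAFKA_HOME:-/opt/kafka}   # hypothetical default path
"$KAFKA_HOME/bin/kafka-server-start.sh" -daemon "$KAFKA_HOME/config/server.properties"
sleep 5
# jps lists the broker under its main class name, Kafka.
jps | grep -w Kafka
EOF
chmod +x start-kafka.sh
```

Run `./start-kafka.sh` on every broker node.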

The firewall must be disabled on every node in the Kafka cluster; otherwise errors like the following are reported:

Error in fetch kafka.server.ReplicaFetcherThread$FetchRequest@5b1413a8 (kafka.server.ReplicaFetcherThread) java.io.IOException: Connection to client3:9092 (id: 1 rack: null) failed
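A small connectivity check that can be run on each node to find blocked broker ports before or after changing firewall settings. It relies on bash's /dev/tcp redirection and the coreutils `timeout` command; the host names are hypothetical. The commands that disable the firewall depend on the distribution. For a systemd system running firewalld (for example CentOS 7, an assumption about this cluster), they are shown as comments.

```shell
# Return 0 if host:port accepts a TCP connection within 3 seconds.
check_port() {
  local host=$1 port=$2
  if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "$host:$port reachable"
  else
    echo "$host:$port NOT reachable (firewall or broker down?)" >&2
    return 1
  fi
}

# Hypothetical broker host names; 9092 is the listener port from the error above.
for host in client1 client2 client3; do
  check_port "$host" 9092 || true
done

# On CentOS 7 with firewalld (assumed), disabling the firewall looks like:
#   systemctl stop firewalld
#   systemctl disable firewalld
```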

Kafka Manager

A tool for managing Apache Kafka. https://github.com/yahoo/kafka-manager

Prerequisites

Install sbt and JDK 8

To see read and write throughput, JMX must be enabled on Kafka.

Download

Build

Kafka Manager requires Java 8 or later. If java is not on the PATH, specify a Java 8+ installation explicitly when building and when running.
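A sketch of the download and build, following the kafka-manager README: `./sbt clean dist` produces a zip under target/universal, and the bundled sbt launcher accepts `-java-home` to select a JDK 8 that is not on the PATH. The JDK path and the script name are hypothetical.

```shell
cat > build-kafka-manager.sh <<'EOF'
#!/usr/bin/env bash
# Download and build Kafka Manager with a specific JDK 8.
set -euo pipefail
JAVA8_HOME=${JAVA8_HOME:-/usr/java/jdk1.8.0}   # hypothetical JDK 8 path
git clone https://github.com/yahoo/kafka-manager.git
cd kafka-manager
# Put JDK 8 first on the PATH and pass it to the sbt launcher as well.
PATH="$JAVA8_HOME/bin:$PATH" JAVA_HOME="$JAVA8_HOME" \
  ./sbt -java-home "$JAVA8_HOME" clean dist
# The distributable zip ends up here.
ls target/universal/*.zip
EOF
chmod +x build-kafka-manager.sh
```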

Extract

The built package is placed in kafka-manager/target/universal; move it to the installation directory and extract it there.

Configuration

conf/application.conf
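A sketch of the one setting that must change, assuming the command runs from the unpacked kafka-manager directory and using hypothetical ZooKeeper hosts. Appending works because in the HOCON format used by application.conf, a later assignment to the same key overrides an earlier one. The kafka-manager README also documents setting the ZK_HOSTS environment variable instead.

```shell
# Run from the unpacked kafka-manager directory (conf/ ships with the zip).
mkdir -p conf
cat >> conf/application.conf <<'EOF'

# ZooKeeper ensemble where Kafka Manager keeps its own state (hypothetical hosts).
# HOCON: this later assignment overrides the default earlier in the file.
kafka-manager.zkhosts="client1:2181,client2:2181,client3:2181"
EOF
```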

Start

Write a startup script:
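A sketch of such a script, assuming it is saved in the unpacked kafka-manager directory. `-java-home`, `-Dconfig.file` and `-Dhttp.port` are the options shown in the kafka-manager README. Port 9000 matches the web port used below. The JDK path and the output file name are hypothetical.

```shell
cat > start-kafka-manager.sh <<'EOF'
#!/usr/bin/env bash
# Start Kafka Manager in the background on port 9000.
set -euo pipefail
# Assumes this script lives in the unpacked kafka-manager directory.
cd "$(dirname "$0")"
JAVA8_HOME=${JAVA8_HOME:-/usr/java/jdk1.8.0}   # hypothetical JDK 8 path
nohup bin/kafka-manager -java-home "$JAVA8_HOME" \
  -Dconfig.file=conf/application.conf -Dhttp.port=9000 \
  > kafka-manager.out 2>&1 &
echo "kafka-manager started (pid $!)"
EOF
chmod +x start-kafka-manager.sh
```

If the process is killed uncleanly, a leftover RUNNING_PID file in the same directory can prevent the next start until it is deleted.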

Start it:

Usage

Open the web UI on port 9000.

Create a cluster

Configure the cluster: enter the ZooKeeper hosts, select the Kafka version, and enable JMX Polling.

Reference

2. dmy
